It’s not surprising that AI has already entered journalism, since its impact is so clearly visible in many related spheres. In our ongoing series featuring the extraordinary presenters of the Munich-AI Summit 2018, hosted by Develandoo AI Innovation Lab, we’ve taken a deep dive into the topics discussed during the Summit and introduced many of the speakers whose achievements and innovative ideas truly impressed our audience. One of the standout talks was devoted to the role of AI in journalism and media, and to how AI can be applied to text, image, audio and video material.

Our speaker Steffen Konrath is the founder and owner of LIQUID NEWSROOM, a media-IT hybrid. He has a background in marketing and computer science and has worked in management positions (including product owner roles) for more than 20 years. LIQUID NEWSROOM (LNR) focuses on AI-driven solutions for politics, marketing and journalism, and has recently been granted support from the Google Digital News Initiative (DNI), a fund that supports innovative product development in journalism.

Konrath spoke about real use cases showing how big players in the media industry are succeeding with AI. One example is The New York Times, one of America’s leading newspapers, with a worldwide reach. With so many readers commenting on each article, it is practically impossible to handle thousands of comments manually. How many people would a newspaper have to employ to moderate that volume?

As Konrath explains, “So they had a team of roughly 40 moderators, and they said only 10 percent of the articles in The New York Times were open for comments, which means a lot of opportunities to interact with readers were simply lost. On the other hand, it’s just crazy to think about what kind of comments you get if you have a comment section on the website. So a project called Perspective API was built, working together with Google, to find a method to deal with the toxicity of comments. It is a machine that predicts the toxicity of comments, so moderators knew which comments had to be handled manually, and the rest could just be passed through quickly. That’s one example of how a media organization like The New York Times can use natural language processing to be faster and to work more closely with its readers.”

This use case shows how AI can be trained to recognize and sort text. Konrath also spoke about applying AI to images and videos, drawing on the example of ISIS beheading pictures, which he called a nightmare. Working for hours with such horrific content could easily drive a person mad and cause serious mental harm.

“Now, looking at these kinds of examples, these kinds of images and videos, can we use machine learning to do the work instead of a human? Yes, of course we can. We can teach a machine to find beheadings. People looking at an image already know what’s in the picture, but the machine has to work out what kinds of objects might be in the image.”

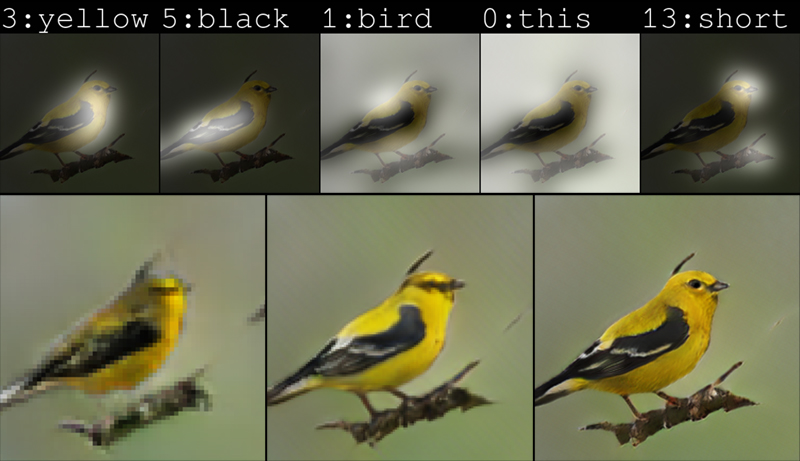

Konrath then asked the audience: can text be used to generate images? He explained that it’s possible thanks to generative adversarial networks (GANs).

“If you think of the image market as a billion-dollar market, that’s quite interesting. Each time you put out a blog post and check the prices for images, it costs something from about 50 euros up to 400 or 500 euros. That’s a huge amount of money every time you publish something, because you have to get a license for the pictures. So what if a machine could understand the text you’ve written and create the image it describes? That’s good stuff, isn’t it?”

He also mentioned a Microsoft project in which researchers built a bot that draws whatever a person describes to it.

As for AI and video, Konrath discussed a well-known case: a video of former US president Barack Obama giving a speech that turned out to be fake.

“So this is one example where Obama seems to say something on YouTube, and it looks quite natural, doesn’t it? In this case, the researchers simply put words into his mouth: they recognized the mouth area and mapped completely different text onto it. That’s scary, isn’t it? This is something we need to take care of, because building such models is quite easy. So in the future we face the problem of how to distinguish material that has been faked. It’s a really nasty thing if you’re no longer in control of your own face, and you can damage people with it. That’s an ethical question as well,” Konrath explains.

Interestingly, Konrath also showed how the entertainment industry could be affected, with an example from virtual reality, where the technology lets you interact with someone who appears to share your space but doesn’t actually exist.

“And as a last note to start-ups: if you are thinking about building a business in this sphere, I think there are amazing things you could do, especially with the generative adversarial networks currently coming up. So many things can be done and experimented with,” Konrath concluded.

You can find the video of his talk below, and it’s definitely worth watching. You can also watch the full talks of the other prominent speakers at our AI summit on our YouTube channel.
